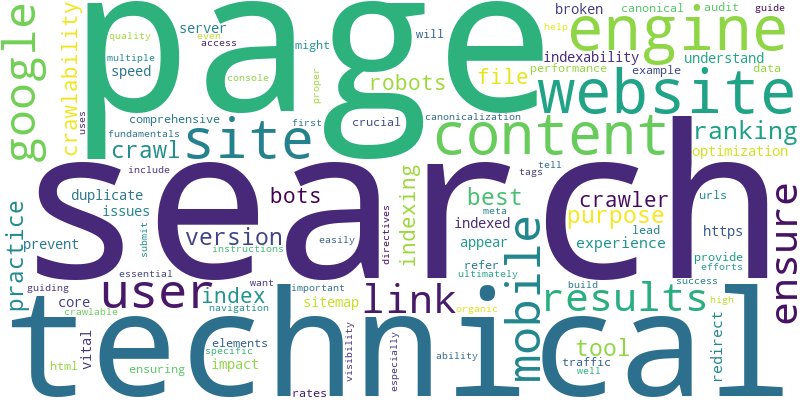

Technical SEO fundamentals are the bedrock of a high-performing website. Without proper technical optimization, even the most compelling content may never reach its audience, because search engines like Google struggle to discover and understand it. This guide covers the essential aspects of technical SEO, focusing on how to configure your website for crawlability and indexability, the prerequisites for search visibility and organic traffic. These core concepts are part of the foundational SEO knowledge that anyone building an online presence needs.

💡 Key Takeaways

- Robots.txt controls search engine access to parts of your site.

- XML sitemaps guide crawlers to discover all important pages.

- Site speed significantly impacts user experience and SEO rankings.

- Mobile-friendliness is crucial for modern search engine performance.

- Ensure proper indexing for your content to appear in search results.

Contents

- What is Technical SEO?

- Crawlability: Guiding Search Engine Bots

  - Robots.txt File: Your Website’s Gatekeeper

  - XML Sitemaps: The Website Blueprint

  - Canonicalization: Preventing Duplicate Content Issues

- Indexability: Ensuring Your Pages Appear

  - Meta Robots Tags: Directing Indexing Behavior

  - Broken Links and Redirects: Maintaining a Healthy Site

- Key Technical Factors for Ranking

  - Site Speed & Core Web Vitals

  - Mobile-Friendliness

  - HTTPS: Security and SEO

  - Structured Data (Schema Markup)

- Tools for Technical SEO Audit

- Frequently Asked Questions About Technical SEO

- Conclusion

What is Technical SEO?

“Technical SEO is the bedrock of online visibility. Without proper crawlability and indexability, even the best content remains undiscovered.”

— Dr. Ava Sharma, Lead SEO Architect, WebScale Solutions

Key Technical SEO Elements & Their Purpose

| Element | Purpose | Impact on SEO |
|---|---|---|
| Robots.txt | Controls crawler access to specific files or directories. | Prevents unwanted pages from being crawled, manages crawl budget. |
| XML Sitemaps | Lists all important pages on your site for search engines. | Helps discovery of new or updated content, especially on large sites. |
| Site Speed | How quickly your website content loads for users. | Improves user experience, reduces bounce rate, positive ranking factor. |
| Mobile-Friendliness | Website’s responsiveness and usability on mobile devices. | Crucial for mobile-first indexing, enhances user experience on smartphones. |
| Canonical Tags | Indicates the preferred version of a page among duplicates. | Prevents duplicate content issues, consolidates link equity. |

Technical SEO refers to website and server optimizations that help search engine crawlers (also called spiders or bots) crawl and index your site more effectively. Unlike on-page SEO (which focuses on content and keywords) or off-page SEO (which deals with backlinks), technical SEO ensures that the “mechanics” of your website are sound. It’s about making your site easy for search engines to access, understand, and rank, which directly affects how well your pages appear in search results. For a deeper treatment, see a comprehensive guide to technical SEO.

Crawlability: Guiding Search Engine Bots

Crawlability is a website’s ability to be accessed and read by search engine bots. If a bot cannot crawl a page, the search engine cannot read its content, so the page is unlikely to be indexed properly or to rank in search results. Ensuring good crawlability is the first critical step in technical SEO.

Robots.txt File: Your Website’s Gatekeeper

The `robots.txt` file is a plain text file placed in the root directory of your website (for example, `https://example.com/robots.txt`). It tells search engine crawlers which areas of your site they may and may not crawl. It controls crawling, not indexing: a disallowed URL can still be indexed, without its content, if other pages link to it. Even so, it’s a powerful tool for managing crawler access.

* Purpose: To keep bots out of specific sections (e.g., admin pages, internal search results, temporary files) and to manage server load.

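* Best Practice: Use it carefully. Disallowing important pages stops crawlers from reading them, so they can’t rank on their content. To keep a page out of search results, use a `noindex` meta robots tag instead, and leave that page crawlable so bots can see the tag.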
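To see how a crawler reads these rules, here is a minimal sketch using Python’s standard-library `urllib.robotparser`. The robots.txt content, the `build_parser` helper, and the URLs are hypothetical. Note that this parser applies the first matching rule by simple prefix and does not support `*` wildcards in paths, whereas Google applies the most specific (longest) matching rule, so treat it as an approximation of Googlebot’s behavior.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block the admin area and internal search
# results for all crawlers, and advertise the sitemap location.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
Sitemap: https://example.com/sitemap.xml
"""

def build_parser(robots_txt):
    """Parse robots.txt rules from a string instead of fetching them over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for url in ("https://example.com/blog/technical-seo",
                "https://example.com/admin/login",
                "https://example.com/search?q=seo"):
        verdict = "allowed" if rp.can_fetch("Googlebot", url) else "blocked"
        print(url, "->", verdict)
```

Running this against a proposed robots.txt before deploying it is a cheap way to catch a `Disallow` line that accidentally matches important pages.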

XML Sitemaps: The Website Blueprint

An XML sitemap is a file that lists all the important pages on your website that you want search engines to crawl and index. It acts as a roadmap, guiding crawlers to all relevant content, especially on large sites or those with complex structures.

* Purpose: To inform search engines about all the pages on your site, including those that might not be easily discovered through traditional navigation.

* Best Practice: Include only canonical, high-quality URLs. Keep it updated and submit it to Google Search Console.
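A sitemap following the sitemaps.org protocol is an XML `<urlset>` of `<url>` entries, each with a required `<loc>` and an optional `<lastmod>`. The sketch below builds one with Python’s standard-library `xml.etree.ElementTree`. The `build_sitemap` helper and the page list are hypothetical, and the sketch assumes the URLs passed in are already the canonical versions.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (canonical_url, lastmod) pairs.

    lastmod is a W3C date string such as "2024-05-01"; pass None to omit it.
    ElementTree escapes special characters such as "&" in URLs automatically.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod is not None:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", "2024-05-01"),
        ("https://example.com/blog/technical-seo", None),
    ]))
```

Generating the file from the same data source that drives your pages keeps it in sync as content is added or removed.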

Canonicalization: Preventing Duplicate Content Issues

Duplicate content can confuse search engines and dilute your SEO efforts. Canonicalization uses a `<link rel="canonical">` element in the `<head>` of a page to tell search engines which URL is the “master” or preferred version. Search engines treat it as a strong hint rather than a binding directive.

* Purpose: To resolve issues where the same or very similar content is accessible via multiple URLs (e.g., `www.example.com`, `example.com`, `example.com/index.html`).

* Best Practice: Always specify a canonical URL for pages that might have multiple versions.
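An audit script can confirm which canonical URL a page actually declares. This sketch uses Python’s standard-library `html.parser`; the `CanonicalFinder` class, the `find_canonical` helper, and the sample markup are hypothetical. It returns every canonical the page declares rather than just the first, because a page with several conflicting canonical tags sends an ambiguous signal.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names before calling this.
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])

def find_canonical(html):
    """Return all canonical URLs declared in an HTML document."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonicals

if __name__ == "__main__":
    # The same page served at three URL variants, all naming one preferred URL.
    page = '<head><link rel="canonical" href="https://www.example.com/" /></head>'
    for variant in ("https://example.com/",
                    "https://www.example.com/",
                    "https://example.com/index.html"):
        print(variant, "->", find_canonical(page))
```

An empty list means the page leaves the choice of canonical to the search engine; more than one entry means the tags conflict and should be reduced to one.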

Indexability: Ensuring Your Pages Appear

Indexability refers to a search engine’s ability to analyze and add a page to its index. Even if a page is crawlable, it might not be indexed if certain technical directives are present or if its quality is deemed too low.

Meta Robots Tags: Directing Indexing Behavior

Meta robots tags are `<meta name="robots">` elements placed in a page’s HTML `<head>` that give search engines page-level instructions. Common directives include `noindex` (prevents the page from being indexed) and `nofollow` (tells bots not to follow the links on the page); for example, `<meta name="robots" content="noindex">`.

* Purpose: To control whether a specific page appears in search results and whether links on it pass SEO value.

* Best Practice: Use `noindex` for pages like thank-you pages, internal search results, or test pages that you don’t want in the public index.
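To verify that a thank-you or test page really carries `noindex`, a script can read the meta robots directives from its HTML. This is a minimal sketch with Python’s `html.parser`; `MetaRobotsParser`, `robots_directives`, and `is_indexable` are hypothetical helpers. It treats the `none` directive as equivalent to `noindex, nofollow` and ignores crawler-specific tags such as `<meta name="googlebot">`.

```python
from html.parser import HTMLParser

class MetaRobotsParser(HTMLParser):
    """Collects directives from every <meta name="robots" content="..."> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # Directives are comma-separated and case-insensitive, e.g. "noindex, follow".
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())

def robots_directives(html):
    """Return the set of lowercased meta robots directives in a page."""
    parser = MetaRobotsParser()
    parser.feed(html)
    parser.close()
    return parser.directives

def is_indexable(html):
    """False if the page carries "noindex" or "none" (noindex + nofollow)."""
    directives = robots_directives(html)
    return "noindex" not in directives and "none" not in directives
```

For example, `robots_directives('<meta name="robots" content="noindex, follow">')` yields `{"noindex", "follow"}`, so `is_indexable` reports that page as excluded.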

Broken Links and Redirects: Maintaining a Healthy Site

Broken links (404 errors) lead to a poor user experience and waste crawl budget. Proper redirects (especially 301 redirects for permanent moves) ensure that users and search engines are guided to the correct new location of a page.

* Purpose: To preserve link equity and ensure seamless navigation for users and crawlers when content moves or is removed.

* Best Practice: Regularly audit for broken links and implement 301 redirects for any pages that have permanently changed URLs.
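Redirects are easiest to audit as a map from old URL to new URL. This sketch works on a hypothetical `{old_url: new_url}` dictionary, such as one exported from server configuration. It follows each chain to its final URL, detects loops, and flattens chains so every old URL can 301 straight to its destination in one hop. The helper names and the `max_hops` cap are assumptions made for the sketch.

```python
def resolve_redirect(url, redirects, max_hops=10):
    """Follow a {old_url: new_url} redirect map to the final URL.

    Returns (final_url, hops). Raises ValueError on a loop or a chain
    longer than max_hops (an arbitrary cap chosen for this sketch).
    """
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen:
            raise ValueError(f"redirect loop at {url}")
        if hops > max_hops:
            raise ValueError("redirect chain too long")
        seen.add(url)
    return url, hops

def flatten_redirects(redirects):
    """Point every old URL directly at its final destination (a single 301 hop)."""
    return {old: resolve_redirect(old, redirects)[0] for old in redirects}

if __name__ == "__main__":
    rmap = {"/old-page": "/interim-page", "/interim-page": "/new-page"}
    print(resolve_redirect("/old-page", rmap))
    print(flatten_redirects(rmap))
```

Flattening matters because each extra hop delays users and crawlers; a chain that ends in a 404 is also caught once you check the final URL.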

Key Technical Factors for Ranking

Beyond crawlability and indexability, several other technical elements significantly influence a website’s search engine performance and user experience.

Site Speed & Core Web Vitals

Google has increasingly emphasized user experience as a ranking factor, and site speed is a central part of it. Core Web Vitals (CWV) are a set of metrics that measure real-world user experience: loading performance (Largest Contentful Paint, LCP), interactivity (Interaction to Next Paint, INP), and visual stability (Cumulative Layout Shift, CLS).

* Impact: Faster sites improve user retention and conversion rates, and search engines favor them. Poor CWV scores can negatively impact rankings.

* Optimization: Optimize images, leverage browser caching, reduce server response time, and minimize CSS/JavaScript.
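Core Web Vitals are assessed on field data at the 75th percentile of page loads, against published thresholds: Largest Contentful Paint (LCP) is “good” at 2.5 s or less and “poor” above 4 s, Interaction to Next Paint (INP) is good at 200 ms or less and poor above 500 ms, and Cumulative Layout Shift (CLS) is good at 0.1 or less and poor above 0.25. This sketch rates a list of hypothetical field samples using a nearest-rank 75th percentile; the helper names are illustrative, and real tools such as the Chrome UX Report may aggregate data differently.

```python
import math

# (upper bound of "good", upper bound of "needs improvement") per metric.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "INP": (200, 500),   # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless score
}

def p75(samples):
    """75th percentile using the nearest-rank method."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[rank - 1]

def rate(metric, samples):
    """Return (p75 value, rating) for one metric's field samples."""
    value = p75(samples)
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return value, "good"
    if value <= poor:
        return value, "needs improvement"
    return value, "poor"
```

For example, `rate("LCP", [1.8, 2.1, 2.4, 3.9])` returns `(2.4, "good")`: one slow load does not sink the page, but it would if more than a quarter of loads were slow.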

Mobile-Friendliness

With the majority of internet users accessing websites from mobile devices, a mobile-friendly website is no longer optional; it’s a necessity. Google primarily uses the mobile version of your content for indexing and ranking (mobile-first indexing).

* Impact: A non-responsive or poorly optimized mobile site will lead to higher bounce rates and lower rankings in mobile search results.

* Optimization: Implement responsive design, ensure clickable elements are well-spaced, and optimize for quick loading on mobile networks. For more details, refer to mobile SEO best practices.
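Responsive design typically starts with a viewport meta tag such as `<meta name="viewport" content="width=device-width, initial-scale=1">`, which tells mobile browsers to lay the page out at the device’s width instead of a zoomed-out desktop width. This sketch checks for that tag with Python’s `html.parser`; `ViewportFinder` and `has_responsive_viewport` are hypothetical helpers, and passing the check is only one signal of mobile-friendliness, not proof of it.

```python
from html.parser import HTMLParser

class ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if (tag == "meta" and self.content is None
                and (attributes.get("name") or "").lower() == "viewport"):
            self.content = attributes.get("content") or ""

def has_responsive_viewport(html):
    """True if the page's viewport meta tag sets width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.content is None:
        # No viewport tag: mobile browsers typically render at a desktop-like width.
        return False
    settings = {}
    for part in finder.content.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    return settings.get("width") == "device-width"
```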

HTTPS: Security and SEO

HTTPS (Hypertext Transfer Protocol Secure) is a secure version of HTTP, encrypting data exchanged between a user’s browser and the website server. Google officially recognizes HTTPS as a minor ranking signal.

* Impact: Protects data in transit, builds user trust (browsers such as Chrome label plain-HTTP pages as “Not secure”), and provides a small ranking boost.

* Implementation: Obtain an SSL/TLS certificate and permanently (301) redirect all HTTP traffic to the HTTPS version.
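The redirect step can be sketched as a small piece of Python WSGI middleware built on the standard library’s `wsgiref.util.request_uri`. Most sites configure this at the web server, CDN, or load balancer instead; `https_redirect_target` and `force_https` are hypothetical helpers. Behind a proxy that terminates TLS, `wsgi.url_scheme` may read `http` even for secure requests, so a real deployment would also need to consult the proxy’s forwarded headers.

```python
from urllib.parse import urlsplit, urlunsplit
from wsgiref.util import request_uri

def https_redirect_target(url):
    """Return the https:// equivalent of an http:// URL, or None if no redirect is needed."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        return None
    netloc = parts.netloc
    if parts.port == 80:
        # Drop the default HTTP port; HTTPS uses 443 by default.
        netloc = netloc.rsplit(":", 1)[0]
    return urlunsplit(("https", netloc, parts.path, parts.query, parts.fragment))

def force_https(app):
    """Wrap a WSGI app so every plain-HTTP request receives a 301 to HTTPS."""
    def wrapper(environ, start_response):
        target = https_redirect_target(request_uri(environ))
        if target is not None:
            start_response("301 Moved Permanently", [("Location", target)])
            return [b""]
        return app(environ, start_response)
    return wrapper
```

Keeping the path and query string in the redirect target matters: redirecting every HTTP URL to the HTTPS home page would throw away the link equity of deep pages.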

Structured Data (Schema Markup)

Structured data is a standardized format for providing information about a webpage and classifying its content. It helps search engines better understand the content, which can lead to rich results (e.g., star ratings, recipes, events) on the search engine results page (SERP).

* Purpose: To enhance how your content is displayed in search results, potentially increasing click-through rates.

* Application: Use JSON-LD, the format Google recommends, with schema.org vocabulary to mark up content types like articles, products, reviews, and local businesses.
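A JSON-LD block is a `<script type="application/ld+json">` element containing schema.org properties. This sketch generates an `Article` snippet with Python’s `json` module; the `article_jsonld` helper and the field values are hypothetical, and a real page would usually include more properties (for example `image`). It escapes `<` inside the JSON so a value containing `</script>` cannot end the script element early.

```python
import json

def article_jsonld(headline, author_name, date_published):
    """Return a <script> element with schema.org Article markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2024-05-01"
    }
    # "\u003c" is a valid JSON escape for "<", so parsers still read the same value.
    payload = json.dumps(data, ensure_ascii=False).replace("<", "\\u003c")
    return f'<script type="application/ld+json">{payload}</script>'

if __name__ == "__main__":
    print(article_jsonld("Technical SEO Fundamentals", "Jane Doe", "2024-05-01"))
```

The generated snippet goes in the page’s HTML; validating it with a structured-data testing tool before publishing catches missing required properties.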

Tools for Technical SEO Audit

Regularly auditing your website for technical SEO issues is essential.

* Google Search Console: This free tool from Google is indispensable. It lets you monitor your site’s indexing status, identify crawl errors, check Core Web Vitals, submit sitemaps, and see how Google views your site.

* PageSpeed Insights: Another Google tool to assess and improve website speed.

* Third-Party Tools: Many paid tools offer comprehensive technical SEO audits, identifying issues like broken links, redirect chains, duplicate content, and more.
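PageSpeed Insights also exposes an HTTP API (v5), which makes it possible to script speed checks across many pages. This sketch builds a request URL and extracts the Lighthouse performance score from a parsed response. The helper names are hypothetical, the response path `lighthouseResult.categories.performance.score` should be checked against the current API reference, and quotas and API keys are left out of the sketch.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"

def psi_request_url(page_url, strategy="mobile"):
    """Build a PageSpeed Insights v5 request URL; strategy is "mobile" or "desktop"."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})

def performance_score(report):
    """Scale the Lighthouse performance score (0 to 1) in a PSI response to 0-100."""
    return round(report["lighthouseResult"]["categories"]["performance"]["score"] * 100)

def fetch_report(page_url, strategy="mobile"):
    """Call the live API (requires network access) and return the parsed JSON."""
    with urlopen(psi_request_url(page_url, strategy), timeout=60) as response:
        return json.load(response)
```

With network access, `performance_score(fetch_report("https://example.com/"))` returns a single 0 to 100 score that can be logged per page over time.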

Live Proof: Our Content Engine’s Performance

All of this website’s content was generated in just one day! This is a top-quality Topical Authority Website made with the ContentOS Engine.

Frequently Asked Questions About Technical SEO


What is the difference between crawlability and indexability?

Crawlability refers to a search engine’s ability to access and read the content on your website. Indexability means that once crawled, the content can be understood and added to Google’s index, making it eligible to appear in search results.

How often should I update my XML sitemap?

You should update your XML sitemap whenever you add, remove, or significantly change pages on your website. For dynamic sites, it’s often updated automatically.

Does site speed directly affect SEO rankings?

Yes. Google has confirmed site speed as a ranking factor, for desktop searches since 2010 and for mobile searches since 2018. Beyond that direct effect, a faster site improves user experience, which benefits SEO indirectly.

Conclusion

Technical SEO is not a one-time task but an ongoing process. By ensuring your website is easily crawlable and indexable, you lay a strong foundation for all your other SEO efforts. Addressing elements like `robots.txt`, XML sitemaps, site speed, and mobile-friendliness ensures that search engines can efficiently process your content, ultimately leading to better visibility, increased organic traffic, and a superior user experience. Prioritizing these technical fundamentals is crucial for long-term SEO success.